How Structured Light and AI Are Shaping the Future of Communication

Structured light technology, enhanced by spatial dimensions and machine intelligence, boosts information transmission and detection. Researchers have achieved significant advancements in data encoding and transmission, using spatial nonlinear conversion to maintain low error rates and high accuracy under challenging conditions. Credit: Zilong Zhang, Wei He, Suyi Zhao, Yuan Gao, Xin Wang, Xiaotian Li, Yuqi Wang, Yunfei Ma, Yetong Hu, Yijie Shen, Changming Zhao

Structured light enhances information transmission by combining advanced image processing with machine learning, achieving high data capacity and accuracy in innovative experiments.

Structured light has the potential to greatly increase information capacity by combining spatial dimensions with multiple degrees of freedom. Recently, combining structured light patterns with image processing and artificial intelligence has shown strong promise in areas such as communication and detection.

One of the most notable features of a structured light field is the two- and three-dimensional distribution of its amplitude. This feature fits naturally with mature image processing techniques and, with the help of machine learning, which is currently driving profound changes in many fields, can also enable cross-medium information transmission. Complex structured light fields based on coherent superposition states can carry abundant spatial amplitude information, and combining them with spatial nonlinear conversion can significantly increase information capacity.
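The idea that a coherent superposition carries information in its spatial intensity pattern can be illustrated numerically. The sketch below assumes ideal Hermite-Gaussian (HG) modes evaluated at the beam waist with unit waist radius; the helper names (`hermite`, `hg_field`, `superposition_intensity`) are hypothetical and this is not the authors' code. It shows that the same two modes, HG10 and HG01, produce different intensity patterns depending only on their relative phase, which is the kind of structure a camera can record.

```python
import math


def hermite(n, x):
    """Physicists' Hermite polynomial H_n(x), via H_{k+1} = 2x H_k - 2k H_{k-1}."""
    h_prev, h = 1.0, 2.0 * x  # H_0 and H_1
    if n == 0:
        return h_prev
    for k in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * k * h_prev
    return h


def hg_field(m, n, x, y, w=1.0):
    """Normalised HG_mn field at the beam waist (z = 0) with waist radius w."""
    norm = math.sqrt(2.0 / (math.pi * 2 ** (m + n) * math.factorial(m) * math.factorial(n))) / w
    s = math.sqrt(2.0) / w
    return norm * hermite(m, s * x) * hermite(n, s * y) * math.exp(-(x * x + y * y) / (w * w))


def superposition_intensity(terms, x, y, w=1.0):
    """Intensity |sum_k c_k * HG_{m_k n_k}(x, y)|^2 of a coherent superposition.

    terms is a list of (m, n, complex_coefficient) tuples."""
    field = sum(c * hg_field(m, n, x, y, w) for m, n, c in terms)
    return abs(field) ** 2


# Same two modes, different relative phase -> different intensity patterns.
diagonal = [(1, 0, 1.0), (0, 1, 1.0)]  # in phase: two lobes, dark line along x = -y
vortex = [(1, 0, 1.0), (0, 1, 1j)]     # pi/2 apart: ring-shaped (doughnut) intensity

for name, terms in (("diagonal", diagonal), ("vortex", vortex)):
    print(name, [round(superposition_intensity(terms, x, y), 4)
                 for x, y in ((0.5, 0.5), (0.5, -0.5))])
```

Because a camera records only intensity, the relative phase between components becomes visible solely through interference like this; richer superpositions of higher-order modes produce correspondingly more intricate patterns.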

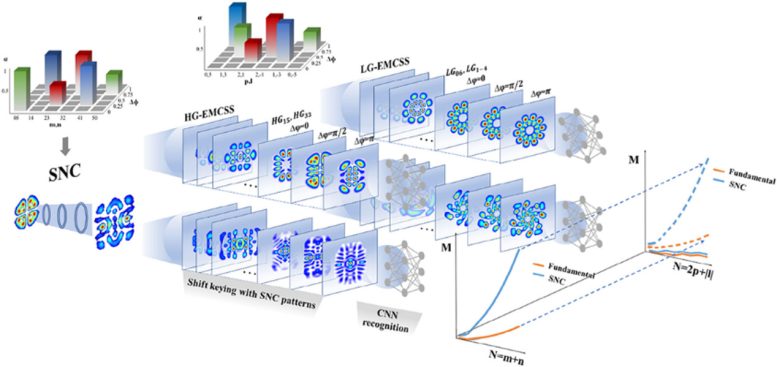

Complex structured light from nonlinear conversion has higher information capacity. Credit: Zilong Zhang, Wei He, Suyi Zhao, Yuan Gao, Xin Wang, Xiaotian Li, Yuqi Wang, Yunfei Ma, Yetong Hu, Yijie Shen, Changming Zhao

Zilong Zhang of Beijing Institute of Technology and Yijie Shen of Nanyang Technological University, together with their team members, proposed a new method for enhancing information capacity based on coherent superposition states of complex modes and their spatial nonlinear conversion. By integrating machine vision and deep learning, they achieved large-angle, point-to-multipoint information transmission with a low bit error rate.

In this model, Gaussian beams are shaped by a spatial light modulator to produce spatial nonlinear conversion (SNC) of structured light, and convolutional neural networks (CNNs) identify the intensity distributions of the beams. Comparing the basic superposition modes with the SNC modes shows that, as the order of the eigenmodes making up the basic mode increases, Hermite-Gaussian (HG) superposition modes offer significantly better encoding capability than Laguerre-Gaussian (LG) modes, and that spatial nonlinear conversion can significantly improve mode encoding capacity.
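Because the CNN works on intensity images, any two modes whose intensity patterns are identical are indistinguishable to it. The article does not explain why HG superpositions outperform LG ones; as a general illustration only, and not the authors' explanation, the sketch below checks that single Laguerre-Gaussian (LG) modes with opposite topological charge ±l have identical intensity, whereas HG_mn and HG_nm (m ≠ n) do not. Fields are evaluated at the waist with w = 1 and normalisation constants omitted; all function names are hypothetical.

```python
import cmath
import math


def hermite(n, x):
    """Physicists' Hermite polynomial H_n(x) (three-term recurrence)."""
    h_prev, h = 1.0, 2.0 * x
    if n == 0:
        return h_prev
    for k in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * k * h_prev
    return h


def laguerre(p, alpha, x):
    """Generalised Laguerre polynomial L_p^alpha(x) (three-term recurrence)."""
    l_prev, l = 1.0, 1.0 + alpha - x
    if p == 0:
        return l_prev
    for k in range(1, p):
        l_prev, l = l, ((2 * k + 1 + alpha - x) * l - (k + alpha) * l_prev) / (k + 1)
    return l


def hg_intensity(m, n, x, y):
    """|HG_mn|^2 at the waist (w = 1), normalisation constant omitted."""
    s = math.sqrt(2.0)
    field = hermite(m, s * x) * hermite(n, s * y) * math.exp(-(x * x + y * y))
    return field * field


def lg_intensity(p, l, x, y):
    """|LG_pl|^2 at the waist (w = 1), normalisation constant omitted."""
    r2 = x * x + y * y
    phi = math.atan2(y, x)
    radial = math.sqrt(2.0 * r2) ** abs(l) * laguerre(p, abs(l), 2.0 * r2) * math.exp(-r2)
    field = radial * cmath.exp(1j * l * phi)  # only this helical phase depends on the sign of l
    return abs(field) ** 2


x, y = 0.3, 0.7
print("LG(0,+1) vs LG(0,-1) same intensity:",
      math.isclose(lg_intensity(0, 1, x, y), lg_intensity(0, -1, x, y)))  # True
print("HG(1,0) vs HG(0,1) same intensity:",
      math.isclose(hg_intensity(1, 0, x, y), hg_intensity(0, 1, x, y)))   # False
```

This covers single modes only; in superpositions, interference between components can make the sign of l visible in the intensity, so the sketch should not be read as a full account of the reported HG/LG difference.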

Verification of Encoding and Decoding Performance

To verify the encoding and decoding performance of this model, the team transmitted a 50×50-pixel color image (Fig. 1). Each of the image's RGB channels was divided into 5 chromaticity levels, giving 5 × 5 × 5 = 125 distinct colors, each encoded by one of 125 HG coherent superposition states. In addition, varying degrees of phase jitter of the kind caused by atmospheric turbulence were loaded onto these 125 modes using a digital micromirror device (DMD) spatial light modulator, and the resulting patterns formed a dataset for training the deep learning model.
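The color-to-symbol step described above can be sketched as follows. This assumes equal-width quantisation bins for each 8-bit channel and a row-major pixel order, neither of which the article specifies; `quantize`, `pixel_to_symbol`, `symbol_to_levels` and `encode_image` are hypothetical names. Each resulting symbol (0 to 124) would then select one of the 125 HG superposition states, but the actual symbol-to-mode assignment used in the study is not given.

```python
LEVELS = 5  # chromaticity levels per RGB channel, as described in the article


def quantize(value, levels=LEVELS):
    """Map an 8-bit channel value (0-255) to one of `levels` equal-width bins (assumed)."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit channel value")
    return value * levels // 256


def pixel_to_symbol(rgb):
    """Combine the three quantised channels into one symbol in 0 .. LEVELS**3 - 1."""
    r, g, b = (quantize(c) for c in rgb)
    return (r * LEVELS + g) * LEVELS + b


def symbol_to_levels(symbol):
    """Inverse mapping: symbol -> (r, g, b) chromaticity levels."""
    r, rest = divmod(symbol, LEVELS * LEVELS)
    g, b = divmod(rest, LEVELS)
    return r, g, b


def encode_image(pixels):
    """Flatten a row-major image (rows of (r, g, b) tuples) into a symbol stream.

    Each symbol would select one of the 125 HG superposition states; that
    table is hypothetical here because the article does not list it."""
    return [pixel_to_symbol(p) for row in pixels for p in row]


# A 50x50 single-colour test image -> 2500 symbols, one per pixel.
image = [[(128, 64, 200)] * 50 for _ in range(50)]
symbols = encode_image(image)
print(len(symbols), LEVELS ** 3, symbols[0], symbol_to_levels(symbols[0]))  # 2500 125 58 (2, 1, 3)
```

Treating the three channels as digits of a base-5 number is simply the most compact way to index all 5³ combinations; any one-to-one table would serve equally well.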

Using nonlinear conversion, the team then analyzed decoding at higher capacity: 530 SNC modes were selected, and the confusion matrix of the convolutional neural network's classification of these modes was measured experimentally (Fig. 2). The results indicate that, because SNC modes have more distinct structural features, they maintain similarly low bit error rates while significantly increasing data capacity, with data recognition accuracy of up to 99.5%. The experiment also verified machine-vision pattern recognition under diffuse reflection, with multiple receiving cameras simultaneously achieving high-precision decoding at observation angles of up to 70°.
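Two quantities behind these results can be computed directly: recognition accuracy from a confusion matrix (correct predictions on the diagonal divided by all samples), and the information carried per symbol when N modes are available, log2(N) bits if every mode is used as an equally likely symbol (an assumption). The toy matrix below uses made-up counts and is not data from the study; the function names are hypothetical. Note that one minus accuracy is a symbol error rate; converting it to a bit error rate depends on how bits are mapped to modes, which the article does not describe.

```python
import math


def recognition_accuracy(confusion):
    """Fraction of correctly classified samples: trace / total.

    confusion[i][j] counts samples of true mode i predicted as mode j."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def bits_per_symbol(num_modes):
    """Information per symbol if each of num_modes modes is an equally likely symbol."""
    return math.log2(num_modes)


# Toy 3-mode confusion matrix with made-up counts (NOT data from the study).
toy = [
    [98, 2, 0],
    [1, 99, 0],
    [0, 0, 100],
]
acc = recognition_accuracy(toy)
print(f"accuracy = {acc:.3f}, symbol error rate = {1 - acc:.3f}")  # 0.990, 0.010
print(f"125 modes: {bits_per_symbol(125):.2f} bits/symbol")       # 6.97
print(f"530 modes: {bits_per_symbol(530):.2f} bits/symbol")       # 9.05
```

Under this equal-probability assumption, moving from 125 to 530 distinguishable modes raises the information per transmitted pattern from about 7 to about 9 bits.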

Reference: “Spatial Nonlinear Conversion of Structured Light for Machine Learning Based Ultra-Accurate Information Networks (Laser Photonics Rev. 18(6)/2024)” by Zilong Zhang, Wei He, Suyi Zhao, Yuan Gao, Xin Wang, Xiaotian Li, Yuqi Wang, Yunfei Ma, Yetong Hu, Yijie Shen and Changming Zhao, 09 June 2024, Laser & Photonics Reviews.

DOI: 10.1002/lpor.202470039

Funding: National Natural Science Foundation of China, Nanyang Technological University and Singapore Ministry of Education (MOE) AcRF Tier 1 grant